I know how to write a macro and what it's useful for, but I can't seem to define it well when people ask me.

The Rust book defines macros as a way of writing code that writes other code, which is known as metaprogramming.

In Java you would write an annotation and an annotation processor; in Scala you would also write a macro.

For me it's a code abstraction that I sometimes use to avoid repeating myself.
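To make "code that writes other code" concrete, here is a minimal sketch using a declarative `macro_rules!` macro rather than a procedural one, because it needs no extra crates. The trait `TypeName`, the macro `impl_type_name!` and the types `User` and `Order` are hypothetical, made up for illustration. The macro writes one identical impl per type, which is exactly the "avoid repeating myself" use case.

```rust
/// A hypothetical trait we want several types to implement the same way.
trait TypeName {
    fn type_name() -> &'static str;
}

/// Writes one `impl TypeName` per type passed in, turning each type's
/// tokens into a string literal with `stringify!`.
macro_rules! impl_type_name {
    ($($t:ty),* $(,)?) => {
        $(
            impl TypeName for $t {
                fn type_name() -> &'static str {
                    stringify!($t)
                }
            }
        )*
    };
}

struct User;
struct Order;

// One line here expands into three separate impl blocks.
impl_type_name!(User, Order, u32);
```

Adding another type is one more argument to the macro instead of another copy-pasted impl block.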

Macros often look cryptic, so I try to use them sparingly, but sometimes they are really useful.

What I like about macros in Rust is that the compiler is more permissive about what goes inside a macro: the input is just tokens, so it doesn't scream at me about types until the generated code is compiled.
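A quick way to see this permissiveness is a declarative macro that only counts the tokens it receives. The `count_tokens!` macro below is a hypothetical illustration. Its input would be a type error as real code, but the macro never emits that input as code, so it compiles. Once tokens are expanded into real code, they are type-checked as usual.

```rust
/// Counts the token trees it is given. The input is matched only as raw
/// tokens and never compiled as code, so it does not have to type-check.
macro_rules! count_tokens {
    () => { 0usize };
    ($head:tt $($tail:tt)*) => { 1usize + count_tokens!($($tail)*) };
}

// As real code this would fail (a string assigned to a u32), but the
// macro only counts the seven tokens: let, x, :, u32, =, "not a number", ;
const COUNT: usize = count_tokens!(let x: u32 = "not a number";);
```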

About my mini crate

I'll refactor a simple macro I wrote to reduce boilerplate, published at https://crates.io/crates/actix-json-responder. The actix-json-responder macro cuts down the boilerplate needed to turn a struct into a JSON response. Real simple!

I'm glad people seem to use it, and it's only about 20 lines of code.

It will be even shorter after the refactor, and I'll document the process here.

This kind of macro is a procedural derive macro. I won't go into detail about what other kinds of macros there are, because the Rust book explains them really well.

```rust
extern crate proc_macro;

use proc_macro::TokenStream;
use syn::{parse_macro_input, DeriveInput};

/// Reduces boilerplate for actix view models
/// Note: type Error has to be in context ( define enum Error or import Error)
#[proc_macro_derive(JsonResponder)]
pub fn responder_derive(input: TokenStream) -> TokenStream {
    let ast = parse_macro_input!(input as DeriveInput);
    let struct_name = &ast.ident.to_string();

    let generic_impl = "
        impl actix_web::Responder for {} {
            type Body = actix_web::body::BoxBody;

            fn respond_to(self, _: &actix_web::HttpRequest) -> actix_web::HttpResponse<Self::Body> {
                match serde_json::to_string(&self) {
                    Err(err) => actix_web::HttpResponse::from_error(err),
                    Ok(value) => actix_web::HttpResponse::Ok().content_type(\"application/json\").body(value)
                }
            }
        }
    ";

    generic_impl
        .replace("{}", struct_name.as_str())
        .parse()
        .unwrap()
}
```

This code is ugly, but then again it allows me to write something like:

```rust
#[derive(Serialize, Debug, Display, JsonResponder)]
#[serde(rename_all = "camelCase")]
pub struct MyResult {
    running: bool,
    description: Option<String>,
}
```

The MyResult struct will then be converted into an application/json response.

That in turn lets me write an actix-web handler that returns the struct directly, e.g.:

```rust
use actix_web::{HttpRequest, Result};

pub async fn get_my_result(_req: HttpRequest) -> Result<MyResult> {
    todo!()
}
```

On procedural derive macros

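Procedural macros are not hygienic by default. The code they generate is resolved as if it had been written at the call site, so it is affected by the imports and names in scope there. Be careful that nothing the macro declares clashes with the caller's code, and use fully qualified paths (like actix_web::HttpResponse) for everything the generated code refers to.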
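The same pitfall can be shown with std alone, using a declarative macro. `macro_rules!` macros are only partly hygienic: local variables and labels are isolated, but paths to items such as types resolve at the call site, just as they do in procedural macro output. The macro `owned!` and the module `caller` are hypothetical. The fully qualified path keeps the macro working even when the caller has its own type named `String`.

```rust
/// Builds an owned std String from its argument. The fully qualified path
/// means it always refers to std's String, whatever `String` means at the
/// call site.
macro_rules! owned {
    ($s:expr) => {
        ::std::string::String::from($s)
    };
}

mod caller {
    // A caller-side type that happens to be called `String`. If the macro
    // had written `String::from($s)`, that path would resolve to this type
    // at the call site and fail to compile.
    #[allow(dead_code)]
    pub struct String;

    pub fn greeting() -> ::std::string::String {
        owned!("hello")
    }
}
```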

Procedural derive macros are invoked through #[derive(...)], a special attribute that can be placed on structs, enums and unions. A derive macro can't modify the type it's attached to. It can only emit new items next to it, such as trait impls. In the case of JsonResponder, the macro emits an implementation of actix-web's Responder trait for MyResult that serializes the struct to JSON.
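Here is a std-only illustration of "a derive only adds items", without pulling in actix or serde. It is a hand-written impl roughly equivalent to what #[derive(Debug)] generates for a struct shaped like MyResult. The compiler's real expansion may use different internal helper methods, but the formatted output is the same. The struct itself is left untouched; the impl is simply a new item beside it.

```rust
use std::fmt;

/// Same fields as MyResult, minus the serde/actix derives.
struct MyResult {
    running: bool,
    description: Option<String>,
}

// A derive macro can't change MyResult itself; it only adds items next to
// it. This impl is roughly what #[derive(Debug)] would add for us.
impl fmt::Debug for MyResult {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        f.debug_struct("MyResult")
            .field("running", &self.running)
            .field("description", &self.description)
            .finish()
    }
}
```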

As you can see from the code, my first version is just a raw string that gets filled in with the struct name. At compile time that string is parsed into tokens and injected into the code. I don't see the generated impl in my source files, but the Rust compiler knows it's there.
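One reason the string approach is fragile is that the template is plain text, so nothing stops the placeholder from colliding with real code. The sketch below uses a hypothetical `Marker` template with an empty impl body. The actual template above happens to contain only one "{}", so it isn't affected. `str::replace` substitutes every occurrence, while `str::replacen` stops after the given count.

```rust
/// Hypothetical template: `{}` is meant as the type-name placeholder, but
/// the empty impl body is also spelled `{}`.
const TEMPLATE: &str = "impl Marker for {} {}";

/// Naive fill: replaces *every* "{}", including the impl body, so the
/// result is no longer valid Rust.
fn fill_naive(name: &str) -> String {
    TEMPLATE.replace("{}", name)
}

/// Replaces only the first "{}". This works here, but it still depends on
/// where the placeholder sits in the text, which quote! avoids entirely.
fn fill_first(name: &str) -> String {
    TEMPLATE.replacen("{}", name, 1)
}
```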

You'll see proc_macro_derive and parse_macro_input!(input as DeriveInput) in most procedural derive macros. The first is an attribute that marks the function as a derive macro. The second parses the input tokens into syn's DeriveInput, a syntax tree describing the type the macro is derived on (its name, generics, attributes and fields).

Refactor

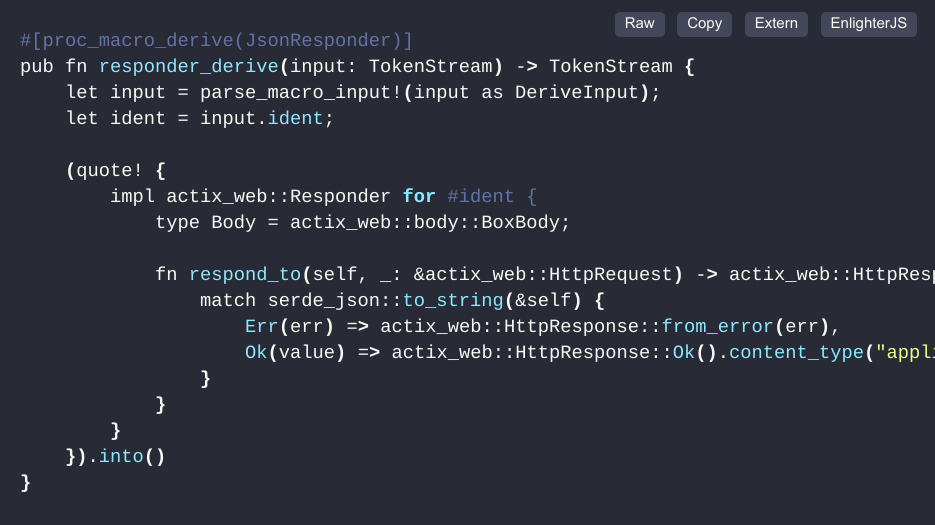

I managed to reduce the code slightly with the help of the quote crate, which turns syntax tree data structures into tokens of source code. It's not so much about reducing the code, though: my solution of building a string and replacing part of it was just ugly to me!

Doesn't this read better?

```rust
use proc_macro::TokenStream;
use quote::quote;
use syn::{parse_macro_input, DeriveInput};

#[proc_macro_derive(JsonResponder)]
pub fn responder_derive(input: TokenStream) -> TokenStream {
    let input = parse_macro_input!(input as DeriveInput);
    let ident = input.ident;

    // quote! builds a proc_macro2::TokenStream; .into() converts it to the
    // proc_macro::TokenStream the compiler expects.
    (quote! {
        impl actix_web::Responder for #ident {
            type Body = actix_web::body::BoxBody;

            fn respond_to(self, _: &actix_web::HttpRequest) -> actix_web::HttpResponse<Self::Body> {
                match serde_json::to_string(&self) {
                    Err(err) => actix_web::HttpResponse::from_error(err),
                    Ok(value) => actix_web::HttpResponse::Ok().content_type("application/json").body(value)
                }
            }
        }
    }).into()
}
```

Any variable in scope can be interpolated inside quote! with a # prefix, like #ident, as long as its type implements quote's ToTokens trait (syn's Ident does).

Release a Crate

My usual steps would be:

- increase version in Cargo.toml

- commit a new version

- cargo release as a dry run, and then cargo release --execute
- create a new tag and a new release, and generate a changelog
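The version bump in the first step follows SemVer: bumping one component resets every component to its right to zero. Here is a small sketch of that rule, assuming a plain MAJOR.MINOR.PATCH string. Pre-release and build metadata such as 1.0.0-rc.1 are deliberately not handled. The names `Level` and `bump` are hypothetical and not cargo-release's API.

```rust
/// Which part of a MAJOR.MINOR.PATCH version to bump (hypothetical type).
#[derive(Clone, Copy)]
enum Level {
    Major,
    Minor,
    Patch,
}

/// Bumps a plain "MAJOR.MINOR.PATCH" version following SemVer rules.
/// Returns None if the input isn't exactly three numeric components.
fn bump(version: &str, level: Level) -> Option<String> {
    let mut parts = version.split('.').map(|p| p.parse::<u64>().ok());
    // The outer `?` handles a missing component, the inner one a non-number.
    let major = parts.next()??;
    let minor = parts.next()??;
    let patch = parts.next()??;
    if parts.next().is_some() {
        return None; // more than three components
    }
    let (major, minor, patch) = match level {
        Level::Major => (major + 1, 0, 0),
        Level::Minor => (major, minor + 1, 0),
        Level::Patch => (major, minor, patch + 1),
    };
    Some(format!("{major}.{minor}.{patch}"))
}
```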

But doing all of that by hand is error-prone, so I have a GitHub workflow called Release that is triggered whenever a new tag is pushed.

The release workflow looks like this:

```yaml
name: Release

# Runs when a tag is pushed, or when triggered manually from the UI or API
# (workflow_dispatch).
on:
  push:
    tags:
      - '*'
  workflow_dispatch:

# A workflow run is made up of one or more jobs that can run sequentially or in parallel
jobs:
  # This workflow contains a single job called "release"
  release:
    name: Create github release
    # The type of runner that the job will run on
    runs-on: ubuntu-latest
    # Steps represent a sequence of tasks that will be executed as part of the job
    steps:
      - name: Checkout code
        uses: actions/checkout@v2
      - uses: actions-rs/toolchain@v1
        with:
          toolchain: stable
      # Lint: fail on any clippy warning
      - uses: actions-rs/cargo@v1
        with:
          command: clippy
          args: --all-targets -- -D warnings
      # Create a GitHub pre-release tagged "latest"
      - uses: "marvinpinto/action-automatic-releases@latest"
        with:
          repo_token: "${{ secrets.GITHUB_TOKEN }}"
          automatic_release_tag: "latest"
          prerelease: true
          title: "Development Build"
      - uses: actions-rs/cargo@v1
        with:
          command: login
          args: ${{ secrets.CRATES_TOKEN }}
      - uses: actions-rs/cargo@v1
        with:
          command: publish
          args: --dry-run
      - uses: actions-rs/cargo@v1
        with:
          command: publish
```

As you can see, the steps are: check out the code, lint with clippy, create a GitHub release, log in to crates.io, do a publish dry run, and finally publish.

Conventional Commits

One more thing I've started using more is commitizen. It's a tool that enforces Conventional Commits, a commit message format that is easy to inspect and easy to build automation on, such as changelog generation. I used it before on my Node projects, but there's no real reason it couldn't be used on any type of project.
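Automation like changelog generation works because a Conventional Commits header has a predictable shape: type[(scope)][!]: description. Here is a simplified parser sketch for that header line, assuming single-line headers. It is not a full implementation of the specification, and the names `CommitHeader` and `parse_header` are hypothetical. A changelog tool could then group parsed headers by `kind` (feat, fix, ...) and flag the `breaking` ones.

```rust
/// A parsed Conventional Commits header, e.g. "feat(parser)!: add arrays".
#[derive(Debug, PartialEq)]
struct CommitHeader<'a> {
    kind: &'a str,          // e.g. "feat", "fix", "chore"
    scope: Option<&'a str>, // text inside the optional parentheses
    breaking: bool,         // true when "!" comes right before the colon
    description: &'a str,
}

/// Simplified parser for "type[(scope)][!]: description".
/// Returns None when the line doesn't have that shape.
fn parse_header(line: &str) -> Option<CommitHeader<'_>> {
    let (prefix, description) = line.split_once(": ")?;
    let description = description.trim();
    if description.is_empty() {
        return None;
    }
    let (prefix, breaking) = match prefix.strip_suffix('!') {
        Some(p) => (p, true),
        None => (prefix, false),
    };
    let (kind, scope) = match prefix.split_once('(') {
        // A scope must be closed by ")" right before the colon or "!".
        Some((kind, rest)) => (kind, Some(rest.strip_suffix(')')?)),
        None => (prefix, None),
    };
    let valid_kind = !kind.is_empty() && kind.chars().all(|c| c.is_ascii_alphanumeric());
    if !valid_kind || scope == Some("") {
        return None;
    }
    Some(CommitHeader { kind, scope, breaking, description })
}
```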